A Simple, Funny Explanation for Grandma

So, what are AI hallucinations? Artificial intelligence is impressive and helpful, but sometimes completely wrong. Not because it’s trying to fool you, but because it occasionally acts like an overeager child who really wants to give you an answer, even if it has to guess.

AI hallucinations occur when a Large Language Model (like ChatGPT, Grok, or Gemini) generates information that is factually incorrect, illogical, or entirely fabricated, but presents it with 100% confidence.

Unlike a search engine that retrieves existing data, generative AI predicts the next word in a sentence based on probability and patterns. Occasionally, the model prioritizes “fluency” (making a sentence look good) over “accuracy” (making the sentence true).

Think of it this way: It is like a super-advanced autocomplete that sometimes fills in the blanks with a convincing lie because it fits the pattern of the sentence.

Here’s the simplest explanation possible for why AI “hallucinates,” written so an 80-year-old grandmother could read it with her morning coffee and say, “Well, now THAT makes sense.”

What Is an AI Hallucination?

An AI hallucination is when a computer gives you an answer that sounds right but is completely made up.

Think of it like this:

Grandma: “What’s the capital of Kansas?”

Your 5-year-old grandchild: “Banana City!”

Said with so much confidence you almost believe it.

The child isn’t lying—they just don’t know, so they guess.

AI does the same thing, but with fancier vocabulary.

Why Does AI Make Things Up?

Because of how it works under the hood. Here’s a humorous breakdown:

1. AI Predicts Words, Not Truth

AI doesn’t know things—it predicts things.

It decides what word should come next, like a supercharged version of autocomplete.

If it doesn’t have the right information, it fills in the blank, just like you might when telling a story from 1949 that you can’t fully remember.

And yes, it tells its stories with the same confidence Uncle Bob uses when explaining why he “almost made the NFL.”
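For the curious grandchild reading over Grandma's shoulder, here is a toy sketch of "predicting words, not truth." It is not how ChatGPT or any real model is built; it only counts which word most often follows another in a tiny, made-up practice text (the text and the function names are assumptions for illustration).

```python
from collections import Counter, defaultdict

# A tiny, made-up "training text" (illustration only).
TRAINING_TEXT = (
    "the capital of kansas is topeka . "
    "the capital of banana land is banana city . "
    "the capital of fruit land is banana city . "
)

def build_model(text):
    """Count which word tends to follow each word."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(model, word):
    """Return the most common follower of `word`, or None if never seen."""
    if word not in model:
        return None
    return model[word].most_common(1)[0][0]

model = build_model(TRAINING_TEXT)

# "the capital of kansas is ..." -> the toy only looks at the last word.
print(predict_next(model, "is"))  # banana
```

The toy picks "banana" because that is the most common pattern after "is," not because it is true. Real models look at far more context, but the basic move is similar: pick a likely next word.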

2. Missing Memories = Wild Guesses

AI learns from huge piles of information, but it hasn’t read everything.

So if it hits a topic it doesn’t know, it tries its best and sometimes invents details.

It’s basically saying:

“I don’t know this one… but I’m gonna take a swing!”

This is where fake facts, fake books, and fake quotes show up.

3. It Really Wants to Be Helpful (Sometimes Too Helpful)

AI has been trained to be polite and helpful.

So instead of saying, “I don’t know,” it sometimes gives you an answer anyway.

Kind of like when you ask your husband where the scissors are, and he confidently tells you:

“They’re in the drawer!”

(They are not in the drawer.)

4. Vague Questions Confuse It

If you ask a fuzzy question, AI has to guess what you want, and that guess can turn into an AI hallucination.

Imagine this:

You: “What did Mary say the other day?”

Grandma: “Mary WHO? Mary next door? Mary from church? Mary that stole my Tupperware in ’82?”

AI feels the same confusion.

But instead of asking for clarity, it tries to fill in the gaps.

5. It Doesn’t Know New Stuff

AI has a “last time I studied” date in its brain.

Anything that happened after that date? It has no idea. This creates an AI hallucination!

Ask it about brand-new events, and it’ll guess like someone who hasn’t watched the news since 1973.

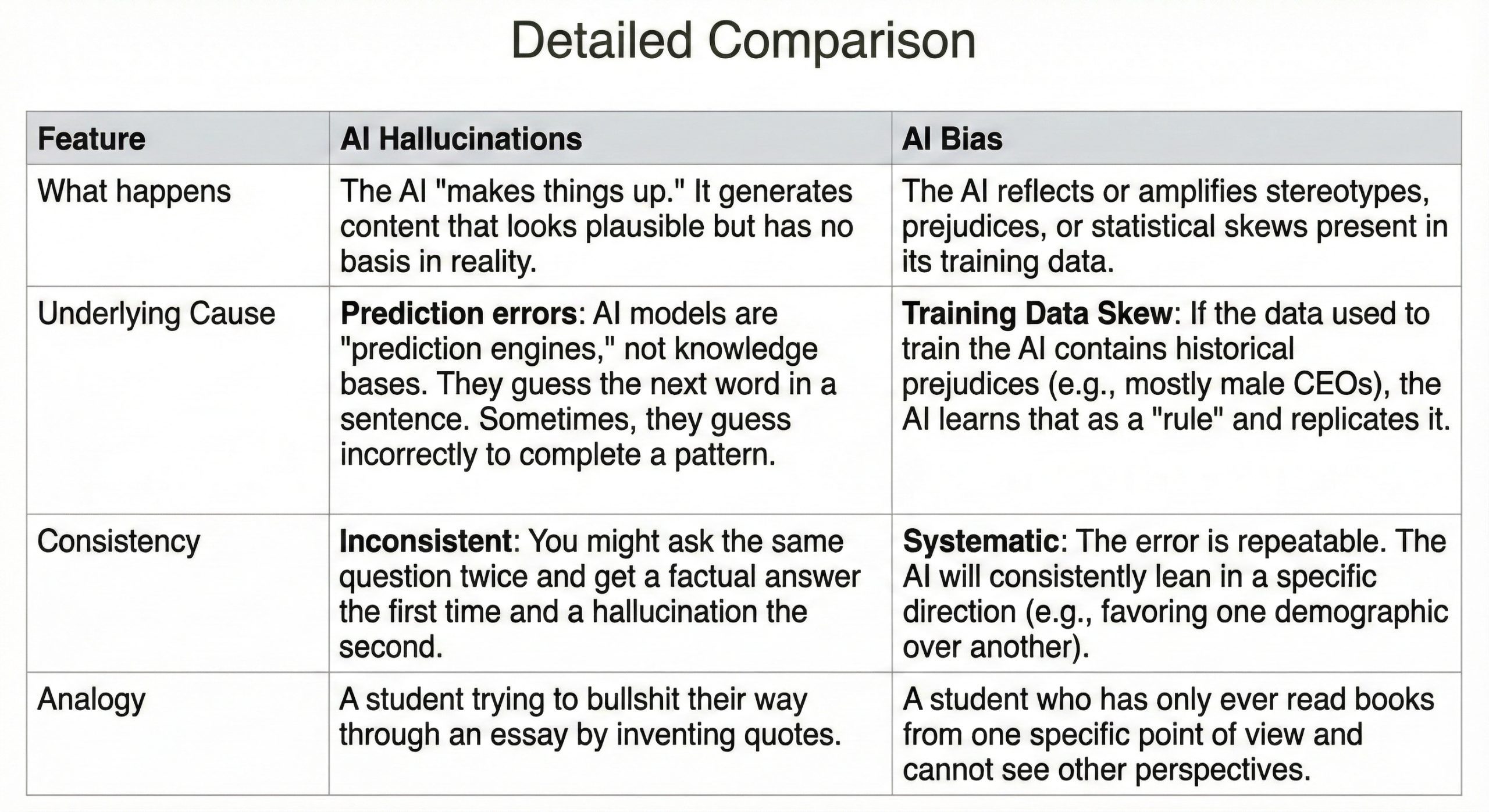

AI Hallucinations and Bias Are Not the Same

The Core Difference

- AI Hallucination is a failure of fact. The AI confidently invents information that is not real (e.g., citing a court case that never happened).

- AI Bias is a failure of fairness or representation. The AI produces results that are systematically skewed, prejudiced, or discriminatory (e.g., consistently showing images of men when asked for a “doctor”).

Where AI Hallucinations Show Up

Here are the greatest hits—the places AI is MOST likely to make things up:

1. Fake Book Titles & Fake Studies

Ask AI for a scientific study it hasn’t heard of, and it might invent:

“According to a 1997 paper by Dr. Picklebottom…”

Dr. Picklebottom is not real. This is an AI Hallucination.

2. Confident Medical or Legal Answers

AI talks like a doctor or lawyer even when it shouldn’t.

It doesn’t have a license—it barely has good manners.

3. Made-Up Numbers

If you ask:

“How many people knit sweaters in Nebraska?”

AI might say:

“22,945 people.”

This number is 100% nonsense.

4. Wrong Image Descriptions

Sometimes it thinks a dog is a potato.

We’ve all been there.

Can We Get Rid of Hallucinations Completely?

Nope.

Not unless we want AI to become so boring and cautious that it answers everything with:

“Maybe. Possibly. Not sure. Ask someone else.”

AI will always guess sometimes because that’s how it works—it’s designed to give answers, not sit silently like a teenager at Thanksgiving.

But we can reduce hallucinations.

How Companies Try to Reduce Hallucinations

Here’s the simple version:

1. Give AI a Library (RAG)

Giving AI a library means handing it a real set of documents to look things up in, so it doesn’t have to make answers up. The technical term is RAG, short for Retrieval-Augmented Generation. That sounds like a robot with a fancy college degree, so here’s the simple version: let it look things up before answering.

Imagine AI is a very smart person: a student, a business owner, or even you.

But no matter how smart, people sometimes guess when asked a question.

Now imagine you created a library:

- books, notes, PDFs

- articles, websites

Now you simply instruct the AI: “Go to the library before answering.”
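The "go to the library first" idea can be sketched in a few lines. This is a toy under stated assumptions: the two-sentence library, the word-matching search, and the function names are all made up for illustration. Real RAG systems use much smarter search and then hand the passage they find to the AI model.

```python
import re

# A tiny, hypothetical library of trusted notes.
LIBRARY = [
    "Topeka is the capital of Kansas.",
    "Lincoln is the capital of Nebraska.",
]

# Small filler words that should not count as a match.
FILLER = {"the", "is", "of", "what", "a", "s", "in"}

def key_words(text):
    """Lowercase the text and keep only the meaningful words."""
    return set(re.findall(r"[a-z]+", text.lower())) - FILLER

def look_up(question, library, min_overlap=2):
    """Return the passage sharing the most key words, or None if too few match."""
    asked = key_words(question)
    best, best_score = None, 0
    for passage in library:
        score = len(asked & key_words(passage))
        if score > best_score:
            best, best_score = passage, score
    return best if best_score >= min_overlap else None

def answer(question, library=LIBRARY):
    """Answer only from the library; otherwise admit not knowing."""
    passage = look_up(question, library)
    if passage is None:
        return "I don't know."
    return f"According to the library: {passage}"

print(answer("What is the capital of Kansas?"))
print(answer("How many people knit sweaters in Nebraska?"))
```

The first question finds a matching note, so the answer comes from the library. The second has nothing solid to match, so the toy says "I don't know." instead of inventing a number.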

2. Teach It to Say “I Don’t Know”

Harder than it sounds.

AI is like some family members. It hates admitting when it’s confused.

3. Add Guardrails

Tell it:

- “Don’t guess.”

- “Stay within these facts.”

- “Stop making up Dr. Picklebottom.”
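One simple kind of guardrail is a check that runs after the AI has written its answer. The sketch below is only an illustration: the list of known experts is hypothetical, and the check only looks for names that start with "Dr." Real guardrails are more elaborate, but the idea is the same: if the answer leans on someone who is not on the trusted list, stop it.

```python
import re

# Hypothetical list of experts we can actually verify.
KNOWN_EXPERTS = {"Dr. Smith", "Dr. Jones"}

def find_cited_doctors(answer):
    """Find every 'Dr. Lastname' mentioned in the answer."""
    return set(re.findall(r"Dr\. [A-Z][a-z]+", answer))

def check_answer(answer, known=KNOWN_EXPERTS):
    """Pass the answer through only if every cited doctor is on the list."""
    unknown = find_cited_doctors(answer) - known
    if unknown:
        return "Blocked: cannot verify " + ", ".join(sorted(unknown))
    return answer

print(check_answer("According to a 1997 paper by Dr. Picklebottom, knitting cures hiccups."))
# Blocked: cannot verify Dr. Picklebottom
```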

4. Improve Its Training

Feed it better information—kind of like giving a kid more vegetables so they stop thinking fries are a food group.

How YOU Can Reduce AI Hallucinations

Here’s the cheat sheet:

- Ask clear questions.

- Ask for real sources.

- Tell it not to guess.

- Ask for confidence levels (“How sure are you?”).

- Tell it to ask you clarifying questions.

- Tell it to only use facts it can verify.

Basically, talk to it like you’re training a helpful—but slightly confused—assistant.
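If you find yourself typing the same instructions every time, the cheat sheet can be wrapped into a reusable note. This is a minimal sketch: the wording of the rules and the function name are assumptions, and no wording can guarantee the AI will obey. It simply puts the rules in front of your question.

```python
# The cheat sheet, written as rules to send along with every question.
RULES = [
    "Do not guess.",
    "If you are not sure, say \"I don't know.\"",
    "Name a real source for each fact.",
    "Tell me how sure you are.",
    "Ask me a clarifying question if my question is vague.",
]

def build_prompt(question, rules=RULES):
    """Put the numbered rules first, then the question."""
    lines = ["Please follow these rules:"]
    lines += [f"{i}. {rule}" for i, rule in enumerate(rules, start=1)]
    lines.append("")
    lines.append(f"My question: {question.strip()}")
    return "\n".join(lines)

print(build_prompt("What is the capital of Kansas?"))
```

Paste the printed text into whichever AI tool you use, and change the rules to suit your own questions.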

Simple Summary for Grandma

AI doesn’t “think.”

It predicts words.

When it doesn’t know something, it guesses.

Sometimes those guesses are silly, wrong, or completely made up.

It’s not lying.

It’s not trying to fool you.

It’s just trying REALLY hard to help… sometimes too hard.

Like a polite grandchild who says,

“Yes, Grandma, I DEFINITELY know how to fix your TV!”

and then promptly breaks the remote.

Conclusion: AI Hallucinations

If you use AI for work, research, or creative writing, you must understand AI hallucinations to protect yourself from the following risks:

- The “Confidence” Trap: AI does not sound unsure when it is wrong. It will invent court cases, historical dates, or medical advice with the same authoritative tone it uses for facts. Readers need to know that plausibility does not equal truth.

- Reputational Risk: If a professional uses AI to write a report or article and fails to verify the claims, they risk publishing embarrassing falsehoods (e.g., the real-world case where a lawyer cited non-existent legal precedents invented by ChatGPT).

- The Shift from User to Editor: Understanding AI hallucinations changes how a person interacts with AI. Stop treating the AI as an oracle and start treating it as a junior intern: capable and fast, but requiring strict fact-checking before anything is finalized.

The Bottom Line: AI is a Predictor, Not a Professor

Artificial Intelligence is a powerful tool, but it is important to remember that it is fundamentally a prediction engine, not a fact repository. It doesn’t “know” the truth; it simply calculates which word is most likely to come next to create a fluent, convincing sentence. When it lacks data, it prioritizes sounding helpful over being accurate, leading to confident fabrications known as hallucinations.

In short, enjoy the speed and creativity of AI, but always keep your skepticism handy. It’s like that enthusiastic grandchild: eager to please, but occasionally prone to making up stories just to keep the conversation going.
